The far right has been using social media for years, and the agitation that led to last week’s riots has been festering for just as long, intensifying after Brexit. Hope not Hate, amongst others, has done excellent work over several years tracking the far right’s presence on social media, establishing that it uses platforms including Facebook, Twitter and YouTube for ‘recruitment, propagandising at scale, disruption of mainstream debate, and the harassment of victims’. The stoking of last week’s events was exposed by a number of analysts, principally Dr Marc Owen Jones. As Alan Rusbridger said in a very useful article:

Within 24 hours Dr Jones was able to assemble and publish a map of the key accounts which had spread disinformation. If Musk cared, his team could have done the same. We are entitled to think he doesn’t care.

Also see the work by Sunder Katwala and Paul Mason, and David Aaronovitch’s powerful summary. Meanwhile Channel Four’s report is worth watching:

Analysis of the far right in the US after the 2016 presidential election pointed to a highly integrated constellation of far-right social media sites, alternative media and television channels such as Fox News. Jonathan Albright did excellent analytical work identifying this at the time, and Rebecca Lewis has produced superb analysis tracking the far-right social media ecosphere over many years. Such constellations exist in the UK too, where Ofcom’s failure to regulate channels such as GB News has allowed dog-whistle politics to thrive while breaching broadcasting regulation and codes. It is unquestionably the case that politicians from Reform, and also some on the right of the Conservative Party (who have been called out by other Conservatives such as David Gauke, Rory Stewart and Gavin Barwell), have stoked the flames.

The exploitation of hateful far right propaganda online has also been further amplified by bot networks and other outlets supported by Russia and its allies.

Facebook and the far right

I wrote a lot about this in my book on Facebook in 2020. The research I put together there contained a synthesis of excellent work by other academics, and my analysis was limited to Facebook and its associated companies. As I pointed out last week, even Facebook’s own founder

was forced to concede that Facebook’s algorithm prioritised posts which were controversial, resulting in ‘polarization and extremism’. Zuckerberg said, ‘when left unchecked, people will engage disproportionately with more sensationalist and provocative content’.

I argued in my book on Facebook that Facebook has aided the growth of far-right white nationalist and neo-Nazi movements across the world. Behavioural scientists working for the UK security service MI5 warned in 2019 that ‘social media provides a forum in which people can explore their dark thoughts in the company of like-minded people’.

Extreme and polarising views have been amplified into the mainstream, with Facebook central to this.

Facebook was once a central communication platform for extreme-right organisations like the English Defence League (EDL) and Britain First, for the extremist leader Stephen Yaxley-Lennon, also known as Tommy Robinson, and for extreme-right movements in mainland Europe. A 2018 Channel Four documentary on Facebook’s moderation procedures showed a manager in the moderation team saying that Yaxley-Lennon’s page, which had been found to violate Facebook’s community guidelines, would have to be referred up because of the number of followers he had. (In other words, they didn’t want to stop earning money from engagement with his posts.) When he was banned from Facebook and Instagram in 2019, Yaxley-Lennon had a following of one million people. Today, of course, Elon Musk has restored Yaxley-Lennon to Twitter and publicly acknowledged his tweets, helping to amplify them.

The EDL and Britain First both used emotional videos and tabloid-style clickbait posts about immigration and Islam, mixed with stories supportive of Britain’s troops and remembrance activities, to draw in unsuspecting users as part of their strategy of building support. This approach, as Donna Zuckerberg explains, is

a conversion process . . . often called ‘swallowing the red pill’ – a reference to the famous scene in the film The Matrix (1999) in which Morpheus (Laurence Fishburne) offers Neo (Keanu Reeves) a choice to return to blissful ignorance or learn the truth about their reality.

Disinformation may be incubated on sites like 4chan or Reddit and then move to Twitter; if it starts to gain media traction, it moves to the mass market, which is Facebook, where it is placed in Facebook groups to ensure widespread sharing and acceptance. Rebecca Lewis and Alice Marwick describe red-pilling thus:

In far-right circles, one is redpilled when they begin believing a truth that is counterfactual to a mainstream belief, which may include white supremacy, Holocaust denial, the danger that immigration posits for white Americans, the oppression of men by feminists, and so forth.

In March 2019, Facebook finally banned white nationalist groups and propaganda from its site, and specific named groups and individuals were subsequently banned. The decision came two weeks after a white nationalist terrorist attacked two mosques in Christchurch, New Zealand, killing 50 worshippers. He had announced the attack on the 8chan message board and live-streamed it on Facebook. Facebook tried to stop viewing of the video, stating that it was viewed live fewer than 200 times and about 4,000 times in total before being removed. In the first 24 hours, Facebook removed about 1.5 million videos of the attack globally, 1.2 million of them blocked at upload. In April 2019, Facebook banned the British extreme-right groups the BNP, EDL and Britain First. Later that month, a Facebook executive admitted to the UK Parliament that Facebook’s systems had failed to identify the video for immediate blocking because in earlier events ‘we had not seen content from the actual angle of the shooter or the attacker’. This atrocity led to The Christchurch Call, the international effort to stamp out violent extremism and its online promotion.

At the time, Facebook presented political news and opinion in its News Feed amid a flow of personal news and engagement, gossip, sport and celebrity commentary, which meant users might encounter views that they would otherwise avoid. Understanding the difference between exposure to news and consumption of it is important, and social media’s interactivity and user engagement provide one element of that understanding. Sharing of news items by friends and family members trumps selection by partisan identification. What happens, said social media researcher Alice Marwick in 2018, is that ‘virtually every story is augmented with someone’s opinion’. She says that scholars and journalists are only just ‘beginning to understand the myriad impacts of social sharing’. In seeking to develop a ‘sociotechnical’ model of media effects, she argues that ‘fake news’ must be understood as part of ‘a larger media ecosystem’. It is not simply a problem of pre-existing polarisation, online advertising, algorithmic sorting, a more sustained extreme-right politics or a right-tilting media environment: all of these things matter.

Attention has increasingly switched to the closely integrated alt-right news network identified shortly after the 2016 election by Dr Jonathan Albright, which includes a wide variety of online publications, news stations, websites, Facebook groups and advertising. Albright demonstrated that while Facebook certainly performed an amplifying and enraging function, the real issue was that the original output was sourced from within a highly integrated alt-right media ecosystem of real-time propaganda. We should remember that Facebook was the primary source of news for only 7% of Donald Trump voters, compared with Fox News, which was the primary source for 40% of them. In the UK, there are now clear attempts to build something similar.

Russia

Facebook conceded in September 2017 that Russian sources had spent about $100,000 on Facebook advertising in the run-up to the 2016 election. It handed over 3,000 election advertisements paid for by Kremlin-linked actors, including the Russian ‘troll factory’, the Internet Research Agency. Facebook estimated that 10 million people might have seen the advertisements. Further research by Dr Jonathan Albright subsequently showed that these advertisements, which included classic voter suppression and racially divisive advertising, might have been shared hundreds of millions of times, although before he could complete his research, Facebook removed access to the data sets on which he had been working. Instagram was not exempt either: Dr Albright identified 170 Instagram accounts spreading Russian propaganda, compared with 120 Facebook pages. Facebook admitted that Russian ‘memes’ might have reached 126 million people, some spread by trolls and ‘sock-puppets’.

In February 2018, Special Counsel Robert Mueller secured indictments against several Russian operatives in a case against the Internet Research Agency, amongst others, which stated: ‘the defendants and their co-conspirators also created thematic group pages on social media sites, particularly on the social media platforms Facebook and Instagram’. These weren’t just advertisements: some mobilised people into real-world action, such as marches and rallies. In July 2018, Mark Zuckerberg confirmed that Facebook had known since 2015 about attempts by the Russian group Advanced Persistent Threat 28 (APT28) to abuse the platform. As far as Russian interference in Brexit is concerned, there has been no Mueller equivalent. A Minority Staff report for the US Senate Committee on Foreign Relations found that

the picture of potential Russian meddling in the June referendum vote has only begun to come into sharper focus as subsequent elections around the world revealed common elements – false or inflammatory stories circulated by bots and trolls.

Facebook subsequently confirmed to the UK House of Commons that there were Russia-linked ads during the Brexit referendum campaign.

Russia’s objective is to sow disorder and create confusion about what to believe. It is no surprise that Russian-backed fake media outlets and disinformation machinery have joined in to spread lies and disinformation over the last week.

Facebook Groups

Facebook has been used in a systematic way by a range of extremist organisations to recruit new members by leading them through a process of engagement, whereby more and more extreme content is recommended to them. One of the ways this has been done is through Facebook Groups. Some of these may purport to be local information or community groups. Dr Jonathan Albright explained in 2018:

Inside these political groups—numbering anywhere from tens of thousands to hundreds of thousands of member users—activities are perfectly obscured. However, the effects and consequences of these activities can be significant. The individual posts, photos, events, and files shared within these groups are generally not discoverable through Facebook’s standard search feature or through the APIs that allow content to be retrieved from public pages. Yet once the posts leave these groups, they can gain traction and initiate large-scale information-seeding and political influence without being easily traced.

Dr Renée DiResta, technical research manager at the Stanford Internet Observatory, also wrote back in 2018 about how extremists develop their views:

They usually report that their initial exposure started with a question, and that a search engine took them to content that they found compelling. They engaged with the content and then found more. They joined a few groups, and soon a recommendation engine sent them others.

Academic research has shown how conspiracy theories spread fast on Facebook and other platforms and how some groups may be more prone to believing conspiracy theories, fake news, disinformation and so on. Fact-checking has no impact on these groups. As DiResta noted,

When Facebook tried adding fact-checking to misinformation, researchers found, counterintuitively, that people doubled down and shared the article more when it was disputed. They don’t want you to know, readers claimed, alleging that Facebook was trying to censor controversial knowledge.

While others were focused on Facebook’s ‘fake news problem’, DiResta was already warning about the radicalisation effects of Facebook Groups:

If you join a Facebook Group for a particular topic, it will naturally serve you other Groups, Pages, and news content related to that topic. Join a couple more, and it’ll look at the people who are common to the groups, decide that you are probably something like them, and then suggest other Groups based on groups that they are in.

These groups have spread their tactics from the USA to the UK and other countries.

Back to Today

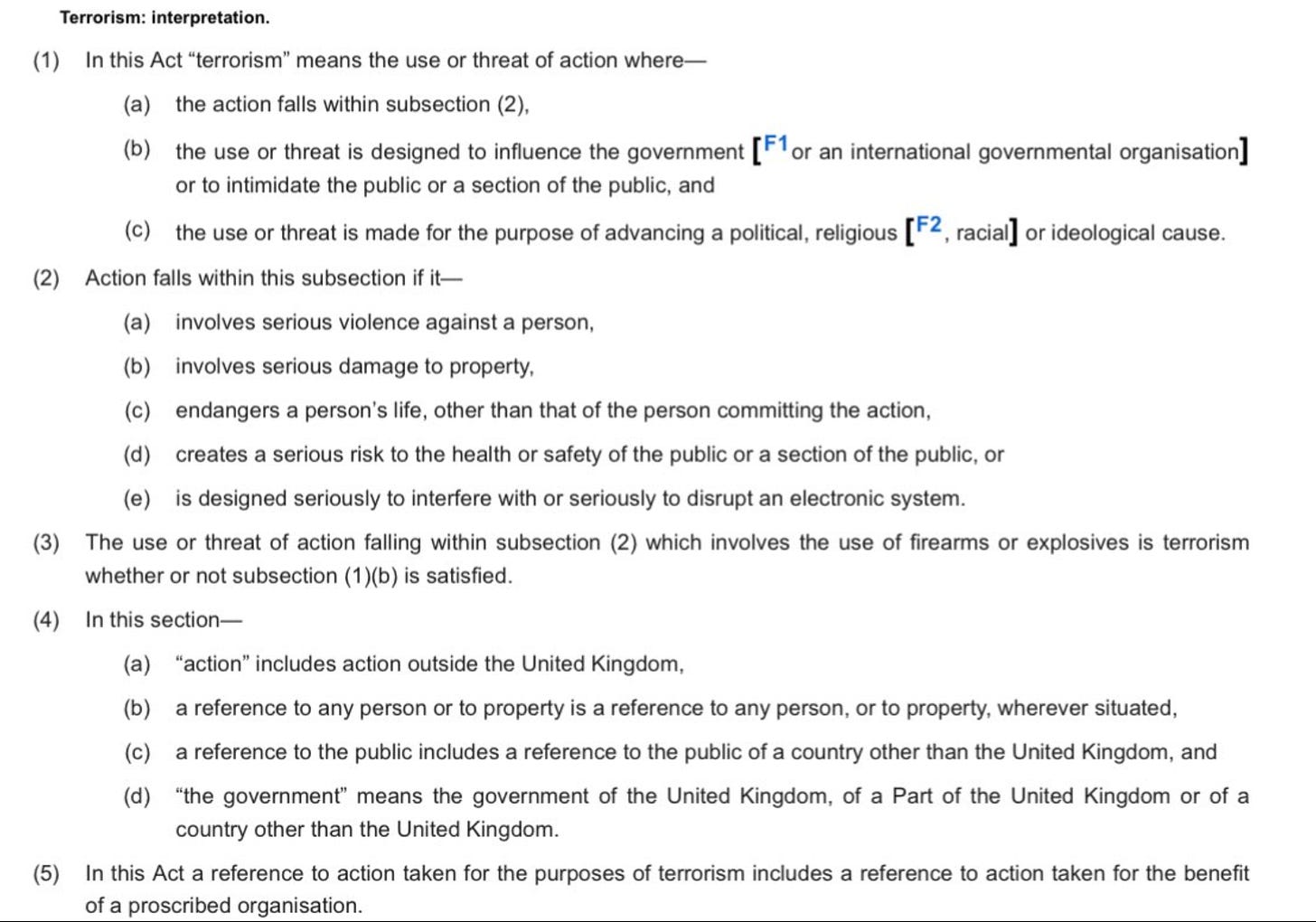

What is different today is the disaggregated nature of the riots coordinated by the far right. As Nick Lowles of Hope not Hate and Sunder Katwala of British Future have pointed out, the EDL as such doesn’t really exist these days; instead, these riots are coordinated online, across a range of platforms, by a number of different individuals. Paul Mason has pointed out, with the aid of Searchlight magazine, how these particular terrorist riots were promoted. I call this ‘networked terrorism’. Chris Cook of the FT posted the legal definition of terrorism on Twitter over the weekend, and, bluntly, that is what the police are dealing with:

Sunder rightly says:

To strengthen the common ground, the liberal left and the Labour government should counter perceptions that it closes down debate too quickly. Scrutiny of contested issues – including policing, immigration or integration – must be part of democratic debate, while insisting that hatred, violence and extremism merit no respect.

Sunder also notes the need for serious action on social media platforms:

More pressure is needed on the social media giants. Five years ago, the major platforms were shocked by the Christchurch mosque massacre in New Zealand into taking more seriously the real-world consequences of online hate. There was significant progress – on rules and standards and setting boundaries against hatred. That has gone into reverse. Elon Musk’s takeover of Twitter (now called X) saw a commitment to once again providing a voice for groups dedicated to hatred and violence – such as Patriotic Alternative and Britain First. At the same time, the capacity for staff at Twitter to respond in real time during an emergency was cut. Action against social media platforms is unlikely without clearer threats of intervention – from national regulation or multilateral coalitions.

Of course, over the weekend Elon Musk amplified the tweets of the far right, including Yaxley-Lennon, and said that civil war in the UK was inevitable. Action against the owner of a platform who himself indulges and reinforces the far right will be complex, but it needs attention, backed by the threat of criminal enforcement, including imprisonment if he comes back to the UK having failed to clean up his platform. As Sathnam Sanghera pointed out, this is someone legitimised by a British Prime Minister less than a year ago, in Rishi Sunak’s bizarre fanboy interview with him:

We now know that Musk is a White nationalist sympathizer and enabler, who has adopted the usual Broligarch tactic of negotiating to settle criminal lawsuits with regulators.

Meanwhile, Carole Cadwalladr has pointed out the diversity of platforms the far right uses to organise, to coordinate and, of course, to post self-generated footage of its so-called protests and ‘investigations’ into ‘what the mainstream media won’t tell you’:

This “alternative information ecosystem” – which includes Telegram, Bitchute, Parler and Gab – flows often invisibly beneath the mainstream media or even social media landscape. It has proved to be a breeding ground for far-right, conspiracy and extremist ideologies that this week collided and mobilised people on to the streets.

We should probably add Facebook-owned WhatsApp and Google-owned YouTube to this. We certainly shouldn’t leave TikTok out of the channels of radicalisation either. TikTok, created by Chinese entrepreneurs and seen as a national security threat in the US, needs greater understanding by politicians in the UK. What is happening on social media is a national security issue and should be treated as such. This is incitement to terrorism: provoking criminal physical action intended to terrorise communities and particular minority groups within our society. It is networked terrorism.

It has, of course, been aided by establishment politicians within the Conservative Party leaning into populism or allying themselves with the populist agendas of Reform and others: the kind of people David Aaronovitch calls ‘gobs on legs’. In the past, the Conservative Party was led by people who knew where to draw the line when articulating concerns about immigration and national identity, who understood that societal norms and issues of law and order needed constant attention, and who stood up to the populists in their ranks. Since 2016, with the growth of social media and its algorithms of outrage, the decline of national and local newspapers, and the rise of new right-wing channels that Ofcom has failed to police, more and more Conservatives have embraced the language of the far right. Some, like David Lidington, David Gauke and Gavin Barwell, and others like Nick Bourne and Peter Fox in Wales, understand and challenge this and know where the boundaries should be:

There is a further issue here. The far right in the UK wants to see a ‘free speech’ standard based on what they think applies in the US. In fact, unlawful speech has always been regulated, as Cass Sunstein says, even in the United States and even under the First Amendment to the Constitution (though who knows where the US Supreme Court is going). The First Amendment is designed to stop Congress passing laws that abridge freedom of speech, not to ensure that everyone has a right to have their views carried on major platforms. As Renée DiResta has said, the right to free speech does not extend to a right to free reach. In other words, platforms are under no obligation to carry unacceptable posts. Ben Wagner has argued, however, that one of the issues raised by Facebook’s global operations is that it seeks in effect to promote assumed American speech norms across the world. There is also evidence to suggest that in countries with stronger rules on hate speech – for example Germany – Facebook has been forced to invest more heavily in content moderation.

In effect, in terms of free speech, the far right wants to turn the debate in the UK back to how things were before the first Race Relations Acts. As a society, we are complicit: we have largely allowed big monopolistic American and Chinese-owned platforms to become the digital public sphere in the UK.

I came to think about the regulation of social media quite late myself, in 2016, after I had left politics, when, after Brexit and Trump’s election, I began to learn more about Facebook’s algorithms and to ask who was regulating them. My social media learning curve has been similar to that of some of the legislators in the UK and elsewhere who have undertaken in-depth investigations since 2016, as I know from conversations with them. Social media cannot be addressed simply through online harms regulation. It takes us to deeper issues: the creation and monitoring of algorithmic recommendation engines, competition policy and anti-trust legislation, and national security, including cyber security. These are integrated issues.

Meanwhile, if you want to do something practical right now, donate to Hope not Hate, whose exposure of the far right and coverage of their sickening terrorist behaviour has been outstanding.